Gameboy Development Forum

Discussion about software development for the old-school Gameboys, ranging from the "Gray brick" to Gameboy Color

(Launched in 2008)


#76 2020-02-21 13:13:09

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

gekkio is talking about the Work RAM inside the Game Boy itself. You changed your equation for pin 25 for testing, which is what might be causing bus contention on the data lines.

Just to make sure: Did you not have any decoupling capacitors on the voltage translators? Your design does have footprints for them.


#77 2020-02-21 13:51:50

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

gekkio wrote:

I'm not surprised since you're causing bus conflicts and fighting with the work RAM. Your circuit is *not* safe for use with a real GB.

Now, idea number 2 is basically this: never have floating inputs when dealing with CMOS chips. The data bus between the GB (or flasher) and the transceiver seems to be ok, but your 3.3V-side data bus is not guaranteed to have a valid data value at all times. If you only have a flash chip in place and A15 is the flash chip CS, your 3.3V-side data bus inputs are floating at all times except when the address bus contains an address that is lower than 0x8000 and the flash chip is driving a value on the data bus.

Wow, thank you that is incredibly helpful. I didn't think there would be a bus conflict since the flash wasn't selected. I'm sure it's obvious, but this is my first cart using voltage translators...I hadn't even considered the fact that they were causing conflicts. It seems to run pretty great now!

I'll have to check out the 74LVC8T245. I was hopeful that since the 5v has to first be regulated to 3v3, the 5v side would always power on first. But, it would be nice to have the peace of mind with the 74LVC8T245.

Tauwasser wrote:

Just to make sure: Did you not have any decoupling capacitors on the voltage translators? Your design does have footprints for them.

For the breadboard, I didn't at first because I didn't have any through hole caps. I have some 0.1 uF caps in place now.

Now, I need to figure out why I can't erase the flash anymore. Sector protection is based on the DYB bits and the PPB bits. The DYB bits are volatile, so power cycling should take care of those. The PPB bits can only be erased via the PPB erase command, which I think I performed. Running the sector protect verify command returns 0x00 for sector 0. I wonder if the chip was damaged during the bus contention?

Last edited by WeaselBomb (2020-02-21 20:22:52)


#78 2020-02-22 15:38:42

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

WeaselBomb wrote:

Wow, thank you that is incredibly helpful. I didn't think there would be a bus conflict since the flash wasn't selected. I'm sure it's obvious, but this is my first cart using voltage translators...I hadn't even considered the fact that they were causing conflicts. It seems to run pretty great now!

There are two separate issues.

The first issue is that with you driving pin 25 (2OE) of the 74LVC8T245 using

Code:

OE <= '0' when (RD = '0' or WR = '0') else '1';

you will always drive the data bus when RD = '0'.

However, RD = '0' can be true even when the WRAM inside the Game Boy is supposed to drive the bus because it is being read from. You must therefore incorporate an address check in that logic that verifies that either FLASH or SRAM (if populated) is actually selected through the address bits.

The second issue is that the data bus on the 3V3 side is floating when it is not being driven by the 74LVC8T245. Some technologies do not like this at all and most will usually have higher-than-usual power consumption (due to circuits inside the chips constantly switching between logical 0 and logical 1). The other address and control pins are always driven by the 74LVC8T245 chips so they are okay.

However, that shouldn't break things. You should probably activate the MachXO-256 internal pull-ups for the data bus, so it is always at a defined level.

WeaselBomb wrote:

For the breadboard, I didn't at first because I didn't have any through hole caps. I have some 0.1 uF caps in place now.

Oh, okay! That explains it. Yeah, decoupling is almost always needed!

WeaselBomb wrote:

Now, I need to figure out why I can't erase the flash anymore. Sector protection is based on the DYB bits and the PPB bits. The DYB bits are volatile, so power cycling should take care of those. The PPB bits can only be erased via the PPB erase command, which I think I performed. Running the sector protect verify command returns 0x00 for sector 0. I wonder if the chip was damaged during the bus contention?

Can you talk to the flash again and enter CFI or Autoselect command mode? I would worry about establishing basic communication again before trying to erase anything. I don't think you can lock the FLASH chip so completely that it no longer accepts any commands.

If anything, bus contention might have damaged the second 74LVC8T245, because it was driving against the WRAM.


#79 2020-02-24 21:19:35

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Tauwasser wrote:

Can you talk to the flash again and enter CFI or Autoselect command mode?

I was able to enter AutoSelect mode again, after pulling the data bus high using the FPGA's internal pull-ups. I'm not sure why that is, but for some reason it doesn't read the manufacturer + device IDs consistently without being pulled up. I assume that's the reason, anyway, since that's the only way it seemed to work. If there is another explanation, it is probably beyond me. Issuing an erase command doesn't seem to always work completely, like the first half of the addresses will be erased. I replaced the wires for A14, but that didn't seem to fix it.

It seems like no matter what I do, I can't get it to read/write Tetris now. I'm honestly wondering if the voltage translators are damaged, or if I'm just fighting against dodgy breadboard connections. I've replaced wires, flash chips, capacitors, nothing seems to work. I've powered up the 5v side first, as suggested, still no luck. It will get MOST of the 32 KB file correct, but EVERY byte that is wrong is 0xFF, which to me suggests it didn't write anything at all, like /OE wasn't asserted when it should have been? I've programmed the Atmega to output some of these 'faulty' address and data patterns, and it drives the pins just fine.

I'm fairly confident the circuit works as-is, now that the bus contention has been sorted out. Last week I was reading/writing Tetris just fine. The circuit boards I assembled don't really work, my reflow method didn't really pan out as I'd hoped. I'm tempted to go ahead and order a pre-assembled prototype board so I can finally start programming the RTC (what I've been dying to do). This is making me want to pull my hair out.

Last edited by WeaselBomb (2020-02-24 21:20:10)


#80 2020-02-29 17:23:31

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

It honestly sounds like your breadboard setup might be what's impeding you. Are there excessive cable lengths or something? Usually all the signals should be slow enough that they are settled before the relevant control signals are asserted. Just to be sure, the pull up on the reset pin is there and working correctly on the 3V3 side, right? Maybe you keep resetting the FLASH midway through some operation...

I take it that there is still no pattern to which bytes are wrong, right? Not every 2nd, 4th, 8th etc. Also, you reading 0xFF might be caused by a number of issues, not necessarily because the FLASH is empty at that location. Maybe #OE or #CE on the FLASH are wonky, too?

Last edited by Tauwasser (2020-02-29 17:24:58)


#81 2020-03-01 01:48:30

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

The cables are pretty long, about 12 inches each.

I think I've narrowed down the problem to writes only, reads seem fine. I can read the file multiple times and it's always the same. After erasing, re-writing, and THEN reading the flash again, the results are different.

I wondered if I was just reading it back incorrectly, so I put it in a Gameboy, but that didn't read it either.

I tried doubling the time that /WR is asserted, but the problem still persists.

So far, I haven't been able to find any noticeable pattern, it only misses around 10 bytes at most for the whole file, and the addresses aren't the same every time.

I actually don't have a pull-up on /RESET...I didn't think one was necessary since it should always be driven? Or is it not always driven? I guess it won't matter on the PCB, since the FPGA should pull it up?

In other news, I was thinking it might be nice to add a battery backup IC to allow the use of SRAM, since it's way less expensive than FRAM, like $3 vs $30. The idea is that if FRAM is being used, then the IC can be left off. I'm curious what you think of this idea:

Here's the chip (U6):

I was trying to route the battery power to the IC without disturbing my ground pour too much:

I'm wondering if C18 is actually necessary for decoupling, since it isn't that far from the power source. The ground pour on the bottom-left corner of the FPGA looks messy to me, maybe it needs keepout lines to prevent an accidental island during production (or maybe just a via)?

Here's the link if you want to see it or mess around with it (I have a backup copy as well, don't worry).

Last edited by WeaselBomb (2020-03-01 02:02:57)


#82 2020-03-01 04:36:56

- gekkio

- Member

- Registered: 2016-05-29

- Posts: 16

Re: MBC5 in WinCUPL (problem)

Any chance for PDF schematics, at least if your breadboard setup isn't radically different? That would make debugging easier, since the problems are most likely not in layout but in the circuit itself.

RESET is pulled up on all Game Boys and well-designed flashers. Note that it's designed to be an "open drain" signal, so the cartridge can also pull it low if necessary (which all normal cartridges with RAM protection chips do!). But in your case you're using it as an input, so this detail doesn't really matter.

BTW, I noticed that on the board you have VIN connected directly to the bus transceiver. You should have a pull-up on the 5V side, because in normal conditions nobody is driving VIN and without a pull-up it'll be a floating input from the bus transceiver's point of view (which is bad, and the value is not guaranteed to be stable).


#83 2020-03-01 06:39:52

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

WeaselBomb wrote:

The cables are pretty long, about 12 inches each.

Whoa, 30cm is crazy long for what you're trying to do. You might be running into issues with reflections on the lines and cross-talk even at 1 MHz.

WeaselBomb wrote:

So far, I haven't been able to find any noticeable pattern, it only misses around 10 bytes at most for the whole file, and the addresses aren't the same every time.

So totally random, that's bad.

WeaselBomb wrote:

I actually don't have a pull-up on /RESET...I didn't think one was necessary since it should always be driven? Or is it not always driven? I guess it won't matter on the PCB, since the FPGA should pull it up?

You are correct. I was just thinking about the way the voltage translator works. It will tri-state its output pins when it perceives the 3V3 side to be off. Now in a situation like before without decoupling, that might happen in-between switching all outputs at the same time.

WeaselBomb wrote:

In other news, I was thinking it might be nice to add a battery backup IC to allow the use of SRAM, since it's way less expensive than FRAM, like $3 vs $30. The idea is that if FRAM is being used, then the IC can be left off. I'm curious what you think of this idea:

Here's the chip (U6):

https://i.imgur.com/44cYwQel.png

So this is some kind of Battery Backup IC? I really second gekkio's wish for an actual schematic.

I saw the one on your website for the Gameboy FRAM RTC Flash Cart, but that is not using any net labels, so it would be pretty hard to read as a PDF.

gekkio wrote:

RESET is pulled up on all Game Boys and well-designed flashers. Note that it's designed to be an "open drain" signal, so the cartridge can also pull it low if necessary (which all normal cartridges with RAM protection chips do!). But in your case you're using it as an input, so this detail doesn't really matter.

WeaselBomb, when you switch to SRAM, this detail might become important, as it would mean you need to do a few more changes.

gekkio wrote:

BTW, I noticed that on the board you have VIN connected directly to the bus transceiver. You should have a pull-up on the 5V side, because in normal conditions nobody is driving VIN and without a pull-up it'll be a floating input from the bus transceiver's point of view (which is bad, and the value is not guaranteed to be stable).

That's especially bad since that's the FLASH pin #WE. Is GBC different here from DMG? I notice that the official dev cartridges from Nintendo always have a pull-down but it's not populated on the later cartridges (e.g. DMG-B04).

So on a classic Game Boy there might be spurious writes with this setup, which is bad.


#84 2020-03-01 12:56:37

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Whoops, sorry I thought I had the schematic in that dropbox link. Here is the new one. I will apologize in advance, it is probably very hard to read because it is so messy, I will work on getting that cleaned up. I don't know if you guys are using DipTrace, but it highlights the entire net when you mouse over a signal. I did however go in and label all of the nets, so there's that at least.

Last edited by WeaselBomb (2020-03-01 16:58:34)


#85 2020-03-01 14:06:00

- gekkio

- Member

- Registered: 2016-05-29

- Posts: 16

Re: MBC5 in WinCUPL (problem)

Consider adding a 10k pull-up to the flash #WP pin. It's not connected in the schematics, and while the datasheet says there's an internal pull-up, I couldn't find any information about its strength. In a noisy breadboard setup it could have an effect, but this is mostly a precaution. I'd probably tie it to 3V3 permanently in the actual board just to be safe.

What are the biggest differences in the breadboard setup? Are all upper addresses (A16-A22) of the flash chip tied to either GND or 3V3?

Where does 3V3 come from in the breadboard setup?


#86 2020-03-01 16:58:15

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

I've added a schematic of the breadboard setup to that link, hopefully it's a bit easier to read than the other one. There should be a DipTrace file as well as a PDF.

Currently, #WP is tied to VCC, and A16-A22 are tied to ground. The data bus and /RESET are being pulled up internally by the FPGA.

gekkio wrote:

BTW, I noticed that on the board you have VIN connected directly to the bus transceiver. You should have a pull-up on the 5V side, because in normal conditions nobody is driving VIN and without a pull-up it'll be a floating input from the bus transceiver's point of view (which is bad, and the value is not guaranteed to be stable).

I see, I will add that to the PCB.

For the breadboard, the Audio pin is disconnected, to force the flasher to use only the ALG12 patterns. I will add the VIN pullup resistor to the PCB.

Tauwasser wrote:

WeaselBomb, when you switch to SRAM, this detail might become important, as it would mean you need to do a few more changes.

Are you referring to write-protecting the SRAM during reset? I may have to dig into that some more to know what needs to be done. I'm not sure how that is typically implemented.

EDIT:

Ah, so the reset ICs found in original carts (MM1134, BA6129, etc) output a reset signal if the voltage drops. They also have chip select outputs to disable writes to RAM. I'll have to see if I have any carts here, I don't recall those ICs actually controlling RAM /CE. Isn't the MBC the only thing that does that?

Just spitballing, but I'm wondering if I could tie CE2 (active high) to the 3v3 rail, and connect VCC to the Vout of the backup IC. That way, when power is removed, CE2 falls, which should disable RAM? There's no chance for it to float if the 3v3 rail is off, right?

Last edited by WeaselBomb (2020-03-02 19:23:27)


#87 2020-03-07 21:34:43

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Swapped out voltage translators, and now I'm noticing a specific pattern. The only bytes that are incorrect are 0x7F when it should be 0xFF. Sometimes it writes 0xFF correctly, sometimes it doesn't. But when it doesn't, it is ALWAYS 0x7F.

Next, I decided to erase the flash, then write an 'Empty' file to it, containing 32KB of only 0xFF.

After reading the flash, I noticed the same issue happened again, but 0x7F was only written to addresses ending in 1, 5, 9, and D.

1 = 0001

5 = 0101

9 = 1001

D = 1101

All of these nybbles share the same two low bits: A0 = 1 and A1 = 0. I'm not sure what to make of this, maybe a hardware problem with the flasher?

I erased the flash and performed the same test again. The resulting read was NOT the same as the first test, but the incorrect values were still limited to addresses ending in 1, 5, 9, and D.

This whole thing seems really bizarre, unless I'm missing something obvious. If anybody wants to see the 32KB file I read from the chip, I placed it in the dropbox location I linked earlier.


#88 2020-03-08 08:30:27

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

Okay, so at least you now have a specific -- even if bizarre -- pattern to look for. This is still the breadboard with the shared #WR for FLASH and the MachXO, right?

Did you confirm that it's the writing that causes this? After erase, the flash should also contain 0xFF everywhere, so reading it should produce the same result as your empty file.

What were the results you read the second time around? Completely unrelated values?

A few theories come to mind:

1) The erase/programming timeouts are hard-coded in the software, see wait_program_flash. In default mode, the flasher will wait 55us for byte program. However, Table 18.1 resp. 73 in the datasheet indicates a typical time of 150us. The software will wait ~5.1s on chip erase when the datasheet indicates a typical time of 38.4s. However, default mode is not used by default (*heh*).

Usually the reader software sets it to use toggle mode, but maybe there is an issue there of some sort? Try -datapoll.

2) A0 is connected to A-1, so check that connection and also the BYTE# pin. Maybe the reason addresses ending in 3, 7, B, and F don't show up that way is that they're the low byte at even addresses (An..A0). Since you write the same data to every byte, and that data happens to be the value after erase, you would currently see neither repeated values nor values that were not written at all.

3) I have at times had trouble with breadboards that had glue on the back. It turned out that the glue acted like a capacitor at times. Maybe the problem is just that D7 is "slower" than the rest of the I/O pins for some reason?

Honestly though, at this point it feels like it could be a systemic problem with your breadboard setup and the troubles might just go away with the real PCB.


#89 2020-03-08 17:17:59

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Well, today was interesting. I discovered that my flasher's Audio pin doesn't work. The connection is there, but there is no continuity. It hasn't been a problem yet, because I've been using /WR instead, for the time being. This made me suspicious, so I tried out the GBxCart flasher that just came in the mail. Surprisingly, it worked on the first try.

Still not sure why the first flasher is having problems, it will require some studying. Maybe you're right, and it is caused by the write timings. The chip erase is definitely working, the entire flash gets initialized to 0xFF. As far as I can tell, reads work perfectly, too. Maybe I broke the Atmega during the bus contention?

Regardless, having SOMETHING that seems to work is such a relief for the time being. The GBxCart firmware/software is open-source, so maybe I can see if it uses different timings.

I'll keep playing with it and see if it works consistently, and maybe then I can finally move on and order the PCB. We got a hot air soldering station at work, and I think I'll have much better success using that next time.

Last edited by WeaselBomb (2020-03-08 18:59:48)


#90 2020-03-19 18:05:16

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

I've been using a breakout board from Kitsch Bent to develop this flash cartridge, and I didn't think to inspect it since it's about 2 years old now. Well, I realized what I thought was a pullup resistor on AIN was actually a pulldown! And there is a capacitor on the AIN trace, which I also don't understand. I ordered a new board and assembled it today, without populating the pulldown resistor or capacitor.

After popping the new board into the flasher, it works perfectly. The original flasher that I thought wasn't working, is actually working fine. It reads and writes Tetris like a pro now.

One question, though. How do I know if a flash chip requires 12-bit or 16-bit for the unlock algorithm? My S29GL064 wouldn't work with the AIN pin until I used the -12bit parameter with GB Cart Flasher, but I'm not sure why.

I see the main difference is that ALG12 in the firmware has 3/4 hex characters used (0x0AAA), while ALG16 has 4/4 hex characters used (0x5555). So is the difference the length of the address? Or is it even considered an address, because it seems more like an instruction?

EDIT:

I guess the ALG-16 patterns need to be shifted before they will work with this chip, don't they? I think you said earlier that Wenting only shifted the ALG-12 patterns.

Now that I'm using AIN, I have to include that in the /OE equation right?

So it would be like this?

Code:

OE <= '0' when (RD = '0' or WR = '0' or AIN = '0') and (ADDR(15) = '0' or RAMCS = '0') else '1';

Last edited by WeaselBomb (2020-03-19 19:56:10)


#91 2020-03-19 22:23:53

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

WeaselBomb wrote:

I've been using a breakout board from Kitsch Bent to develop this flash cartridge, and I didn't think to inspect it since it's about 2 years old now. Well, I realized what I thought was a pullup resistor on AIN was actually a pulldown! And there is a capacitor on the AIN trace, which I also don't understand. I ordered a new board and assembled it today, without populating the pulldown resistor or capacitor.

Wow, sometimes it's the unlikeliest things.

So I think this board was made with the AIN pin as used by the DMG in mind: to avoid influencing the sound, it was always pulled low, and a capacitor was probably added to stabilize it.

WeaselBomb wrote:

One question, though. How do I know if a flash chip requires 12-bit or 16-bit for the unlock algorithm? My S29GL064 wouldn't work with the AIN pin until I used the -12bit parameter with GB Cart Flasher, but I'm not sure why.

I see the main difference is that ALG12 in the firmware has 3/4 hex characters used (0x0AAA), while ALG16 has 4/4 hex characters used (0x5555). So is the difference the length of the address? Or is it even considered an address, because it seems more like an instruction?

EDIT:

I guess the ALG-16 patterns need to be shifted before they will work with this chip, don't they? I think you said earlier that Wenting only shifted the ALG-12 patterns.

It's more of the other way around: The ALG16 pattern will always work, because the ALG12 usually ignores the upper address bits. However, there might be certain chips out there which do not ignore them, so that's why the ALG12 pattern is included anyway. If you shift the ALG16 pattern, that will work too.

However, remember that the firmware only supports the following cases:

Code:

Method | Mapper || ALG16 | ALG12
-------+--------++-------+------
AIN    | None   || ✔     | ✔
AIN    | MBCx   || ✘     | ✔
#WR    | None   || ✘     | ✔
#WR    | MBCx   || ✘     | ✔

Basically, the firmware only supports ALG16 for a directly connected flash chip, but uses ALG16 in AIN mode anyway, because many if not most ALG12 chips will work just as well.

I'm not sure why the AIN case does not support ALG16 with a regular MBC, as writes to the MBC would not interfere with the flash commands. Unlike the #WR method, which would always interfere.

WeaselBomb wrote:

Now that I'm using AIN, I have to include that in the /OE equation right?

So it would be like this?

Code:

OE <= '0' when (RD = '0' or WR = '0' or AIN = '0') and (ADDR(15) = '0' or RAMCS = '0') else '1';

Right, you have to include AIN, however just checking RAMCS is not enough as it's asserted for WRAM as well -- basically everything but VRAM and some HIRAM, I think. Gekkio measured it and put it up on his blog, I think. Instead, check for the proper range of ADDR(15 downto 13) = "101" so you know it's SRAM.
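A minimal sketch of what that decoding could look like, assuming A15..A13 are all routed to the FPGA and that cartridge SRAM sits at 0xA000-0xBFFF. The entity name `oe_decode` and the internal signal names are made up for illustration and are not taken from the actual project:

```vhdl
library ieee;
use ieee.std_logic_1164.all;

-- Sketch only: names are illustrative, not from the thread's project.
entity oe_decode is
    port (
        RD   : in  std_logic;                       -- active low
        WR   : in  std_logic;                       -- active low
        AIN  : in  std_logic;                       -- active low when used as flash write strobe
        ADDR : in  std_logic_vector(15 downto 13);  -- upper cartridge address bits
        OE   : out std_logic                        -- active low, to the transceiver's OE pin
    );
end entity oe_decode;

architecture rtl of oe_decode is
    signal rom_sel  : std_logic;
    signal sram_sel : std_logic;
    signal strobe   : std_logic;
begin
    -- ROM/flash occupies 0x0000-0x7FFF, i.e. A15 = '0'
    rom_sel  <= '1' when ADDR(15) = '0' else '0';

    -- Cartridge SRAM occupies 0xA000-0xBFFF, i.e. A15..A13 = "101"
    sram_sel <= '1' when ADDR = "101" else '0';

    -- Any of the three active-low strobes indicates a bus access
    strobe   <= '1' when (RD = '0' or WR = '0' or AIN = '0') else '0';

    -- Only enable the transceiver when the access targets the cartridge,
    -- so it never drives against WRAM or other Game Boy internals
    OE <= '0' when (strobe = '1' and (rom_sel = '1' or sram_sel = '1')) else '1';
end architecture rtl;
```

If no SRAM is populated, `sram_sel` can simply be dropped from the final expression, which leaves the flash-only check on A15.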

Regards,

Tauwasser


#92 2020-03-20 18:29:23

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Tauwasser wrote:

however just checking RAMCS is not enough as it's asserted for WRAM as well -- basically everything but VRAM and some HIRAM

Oh, I thought it was only asserted for cart RAM. I knew /CS (cart pin 5) was asserted for those ranges, but not RAMCS. Thanks for clearing that up.

So far, I've ONLY been using the FPGA to drive /OE, so I could narrow down my hardware issues.

Now that those seem to be fixed, I guess the next steps to take are:

1. Drive RA14 using the FPGA

2. ROM Bank switching

3. Add RAM + Ram Bank Switching

4. RTC

Code:

library ieee;
use ieee.std_logic_1164.all;

entity MBCx_src is
    Port
    (
        RD    : in  std_logic;
        WR    : in  std_logic;
        AIN   : in  std_logic;
        ADDR  : in  std_logic_vector(15 downto 12);
        DATA  : in  std_logic_vector(1 downto 0);
        RESET : in  std_logic;
        RA    : out std_logic_vector(15 downto 14);
        OE    : out std_logic
    );
end MBCx_src;

architecture Behavioral of MBCx_src is
    signal RomBankReg : std_logic_vector(1 downto 0);
    signal RomBankClk : std_logic;
begin
    -- Transceiver output enable: any strobe while the ROM area is addressed
    OE <=
        '0' when (RD = '0' or WR = '0' or AIN = '0') and ADDR(15) = '0'
        else '1';

    -- Upper flash address bits: bank 0 in the lower half, selected bank otherwise
    RA <=
        "00" when (ADDR(14) = '0' or RESET = '0')
        else RomBankReg when (RomBankReg /= "00")
        else "01";

    -- Clock for the bank register, derived from a write to 0x2000-0x2FFF
    RomBankClk <=
        '0' when (ADDR = "0010" and WR = '0')
        else '1';

    RomBank : process(RESET, RomBankClk)
    begin
        if(RESET = '0') then
            RomBankReg <= "00";
        elsif(rising_edge(RomBankClk)) then
            RomBankReg <= DATA;
        end if;
    end process RomBank;
end Behavioral;

EDIT:

Driving RA14 with FPGA seemed to work fine, so next I'll try ROM bank switching with Super Mario Land.

It reads and writes Super Mario Land perfectly, but I can't seem to get it to play on my DMG. It boots, but the image is definitely wrong, and starting the game causes an instant crash. I tried Tetris, too, and it seems to be experiencing the same issue. Looks like I still have some work to do before moving on.

Last edited by WeaselBomb (2020-03-20 21:21:57)


#93 2020-03-21 15:57:31

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

WeaselBomb wrote:

Tauwasser wrote:

however just checking RAMCS is not enough as it's asserted for WRAM as well -- basically everything but VRAM and some HIRAM

Oh, I thought it was only asserted for cart RAM. I knew /CS (cart pin 5) was asserted for those ranges, but not RAMCS. Thanks for clearing that up.

Wait, now I'm confused. I thought pin 5 was what you meant by RAMCS. Did you mean the pin that directly goes to the CS pin of the SRAM chip?

That pin is usually driven by address decoding logic. If the SRAM has only one chip select pin, the signal is gated through an external backup IC (MM1026A, MM1134A, etc.). Otherwise it is connected to the active-low chip select pin, while the active-high chip select pin is driven exclusively by the backup IC.

Different MBCs use different logic for this. For instance MBC1 will use:

Code:

RAM_CS_N <= '0' when (CS_N = '0' and A(14) = '0' and ram_enable_r = x"A") else
            '1';

Decoding the complete upper address bits (A15..A13) is safest though.
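A sketch of what decoding the complete upper address bits could look like, under the assumption that cartridge SRAM sits at 0xA000-0xBFFF (A15..A13 = "101") and that `ram_enable_r` holds the low nibble last written to the RAM enable register, as in the MBC1 line above. The entity name `ram_cs_decode` is illustrative only:

```vhdl
library ieee;
use ieee.std_logic_1164.all;

-- Sketch only: the entity name is hypothetical.
entity ram_cs_decode is
    port (
        A            : in  std_logic_vector(15 downto 13);  -- upper address bits
        ram_enable_r : in  std_logic_vector(3 downto 0);    -- RAM enable register (0xA = enabled)
        RAM_CS_N     : out std_logic                        -- active low, to the SRAM chip select
    );
end entity ram_cs_decode;

architecture rtl of ram_cs_decode is
begin
    -- Select the SRAM only for 0xA000-0xBFFF and only while RAM access is enabled.
    -- Unlike the CS_N/A14 variant, this does not depend on how the console
    -- drives cartridge pin 5.
    RAM_CS_N <= '0' when (A = "101" and ram_enable_r = x"A") else
                '1';
end architecture rtl;
```

With battery-backed SRAM, this output would presumably still have to pass through (or be combined with) the backup IC so that the chip is deselected while power is failing.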

WeaselBomb wrote:

It reads and writes Super Mario Land perfectly, but I can't seem to get it to play on my DMG. It boots, but the image is definitely wrong, and starting the game causes an instant crash. I tried Tetris, too, and it seems to be experiencing the same issue. Looks like I still have some work to do before moving on.

Just to make sure: Now you did add a pull-up on the AIN line, right? If it floats, that might spell some trouble. What do you mean by "the image is definitely wrong"?

Tetris only ever writes the ROM bank register once to set it to 0x01, so the ROM bank should stay fixed after initialization for the complete duration of the game.

Regards,

Tauwasser

Last edited by Tauwasser (2020-03-21 16:02:39)


#94 2020-03-24 18:02:49

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Tauwasser wrote:

Just to make sure: Now you did add a pull-up on the AIN line, right? If it floats, that might spell some trouble.

I have AIN pulled up on the 5v side through a 10K resistor. I shouldn't need one on 3v3 side since the voltage translator never changes direction of AIN, right?

Tauwasser wrote:

What do you mean by "the image is definitely wrong"?

On Tetris, the top line of the title screen looks like this:

It doesn't happen every single time, though, which makes me wonder if a signal is floating.

When I change the VHDL code to not use any ROM banking, the issue disappears:

Code:

RA <=
    "00" when ADDR(14) = '0' or RESET = '0'
    else "01";

but adding ROM banking causes it to reappear:

Code:

RA <=
    "00" when (ADDR(14) = '0' or RESET = '0')
    else RomBankReg when (RomBankReg /= "00")
    else "01";

RomBankClk <=
    '0' when (ADDR = "001" and WR = '0')
    else '1';

RomBank : process(RESET, RomBankClk)
begin
    if(RESET = '0') then
        RomBankReg <= "00";
    elsif(rising_edge(RomBankClk)) then
        RomBankReg <= DATA;
    end if;
end process RomBank;

I'm only using D0 and D1 for the time being, to try and get 64KB games working. I wonder if the internal pullups on the FPGA could be affecting D0 and D1 somehow, like if the wire length is too long, then the voltage translator can't drive those signals low quickly enough? Either way, I'm suspicious that the Rom Bank isn't being set correctly.

EDIT:

Ok, it's something super simple.

If 64 KB games use 4 banks, then the address ranges of each bank would be:

0: 0000 - 3FFF

1: 4000 - 7FFF

2: 8000 - BFFF

3: C000 - FFFF

After flashing, Banks 0 and 1 are perfect (which explains why Tetris flashed just fine, still not sure why it didn't work on Gameboy though).

In Bank 2, addresses 8100-BFFF have incorrect values.

In Bank 3, addresses C100-FFFF have incorrect values.

In Bank 2, addresses 8100-BFFF perfectly match Bank 1 addresses 4100-7FFF.

In Bank 3, addresses C100-FFFF match Bank 1 addresses 4100-7FFF.

I'm not sure why Bank 2 and 3 start copying from Bank 1 after 0x100 (256) bytes. The only thing that can reset the ROM bank is driving RESET or A14 low.

Replaced RESET and A14 wires, now it seems to flash correctly, but still crashes on Gameboy.

Last edited by WeaselBomb (2020-03-25 16:32:14)

Offline

#95 2020-03-25 17:54:00

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

Have you looked at the way your clock is implemented after place & route? Usually the fabric uses dedicated clocking resources, and when the software encounters a clock generated from combinational logic, it will try to fake the behavior as best as it knows how. Usually that means the relevant signals that would make up the clock signal, as well as the flip flop's output, are fed into a look-up table, which tries to simulate the behavior of the asynchronous (w.r.t. the system clock) combinational clock. This might mean the flip flops are constantly enabled and the D input is constantly being saved to the Q output of each flip flop. Now, if there is a timing mismatch between the individual bits that make up the combinational clock and the system clock, the wrong bit might end up getting stored inside the register.

I think you should try to modify your VHDL. Either carefully craft that the relevant combinational logic output signals use dedicated clocking resources (they can be fed from fabric) or convert them to enable logic and use the internal clock (I think you did not include any external crystal) to clock all registers. By default the internal clock is 2.08 MHz, but it can be changed to higher frequencies. You would want to at least have twice the frequency of your signals (4.194304 MHz / 4 = 1.048576 MHz) to perform an edge detection. However, you probably want to be a lot faster than that to not negatively impact the propagation delay as seen from the Game Edge Connector.

You might also need to look into adding more timing constraints to specify delays from pin to first flip flop and last flip flop to output pin or such. I'm not familiar with Lattice Diamond, but there is a fairly thorough user guide as well as a tutorial that covers part of it. If you have not worked with timing constraints for your design until now, then you're really lucky to have gotten it to do anything you wanted.

So don't give up just yet.

Regards,

Tauwasser

Offline

#96 2020-03-26 13:15:02

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Unfortunately I don't think I quite understand all of that.

That's ok, just more research I have to do.

So it seems I just got lucky with the CPLD working without specifying any timing constraints? I had hoped the FPGA would work similarly...

Tauwasser wrote:

carefully craft that the relevant combinational logic output signals use dedicated clocking resources

I'm not entirely sure what this means or how to do this manually, but it SOUNDS like the better solution.

Does this mean I need to force it to NOT use lookup tables? I'm not sure what dedicated clocking resources are.

Right now, I am more interested in this solution, but that might just be because I don't fully understand it yet.

Tauwasser wrote:

or convert them to enable logic and use the internal clock

Let me try to break that down a bit.

Let's call the internal clock signal CLK. I could use CLK signal as the D input, and the combination logic as the Enable?

As long as CLK is fast enough (at least twice as fast as PHI), when the flip-flop gets enabled by the combination logic, it will always see at least one rising edge of CLK?

Last edited by WeaselBomb (2020-03-26 17:45:01)

Offline

#97 2020-03-26 18:08:07

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

WeaselBomb wrote:

Unfortunately I don't think I quite understand all of that

that's ok, just more research I have to do.

Yeah, getting into CPLD and FPGA design is work in its own right.

The MachXO2 family datasheet is the best starting point for you right now.

However, remember that this might all just be a shaky wire in your setup, so double and triple check everything before going on this journey.

WeaselBomb wrote:

Tauwasser wrote:

carefully craft that the relevant combinational logic output signals use dedicated clocking resources

I'm not entirely sure what this means or how to do this manually, but it SOUNDS like the better solution.

Does this mean I need to force it to NOT use lookup tables? I'm not entirely sure what dedicated clocking resources are.

Right now, I am more interested in this solution, but that might just be because I don't fully understand it yet.

This is usually the worse of the two solutions, because you can only have a limited number of clocks in your system.

There are also other issues I quickly go into below.

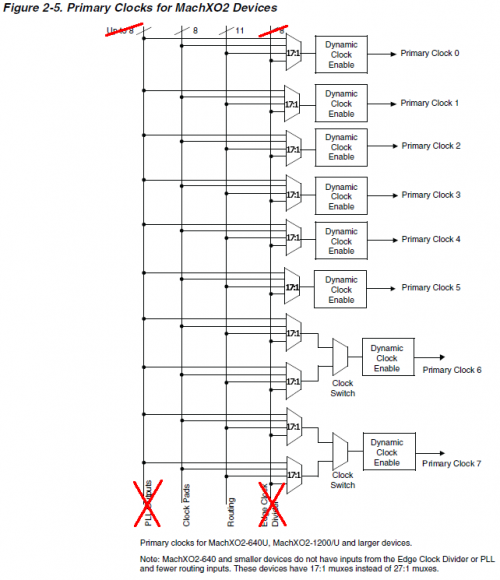

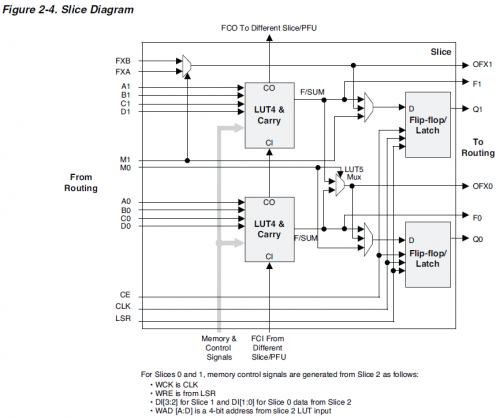

For the MachXO2-256 that's 8 primary clock lines:

Usually, you want to have a suitable system clock and source synchronous logic, so all logic paths are deterministic.

These clock lines are what feed into the individual slice registers:

WeaselBomb wrote:

Tauwasser wrote:

or convert them to enable logic and use the internal clock

Let me try to break that down a bit.

Let's call the internal clock signal CLK. I could use CLK signal as the D input, and the combination logic as the Enable?

As long as CLK is fast enough (at least twice as fast as PHI), when the flip-flop gets enabled by the combination logic, it will always see at least one rising edge of CLK?

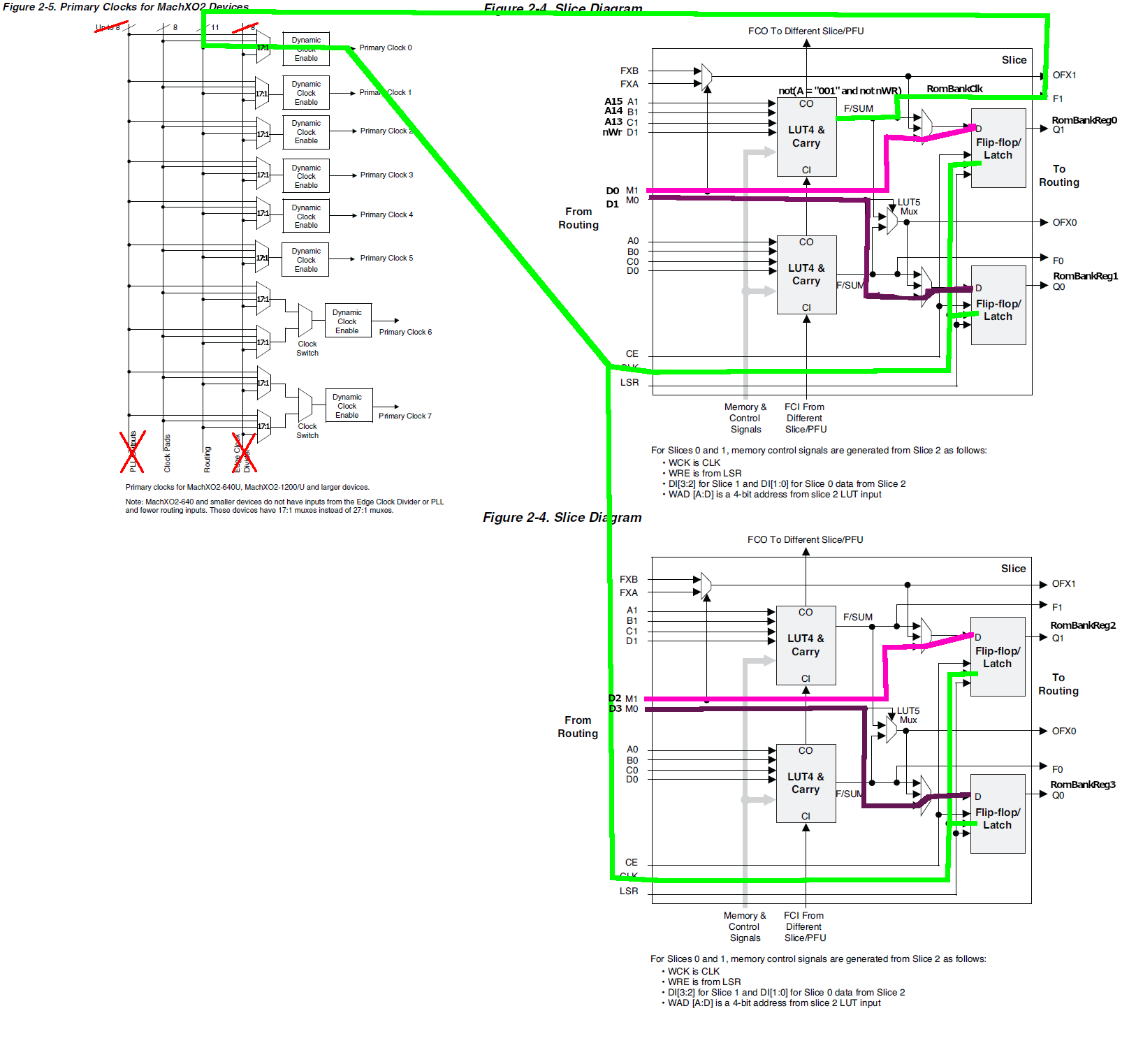

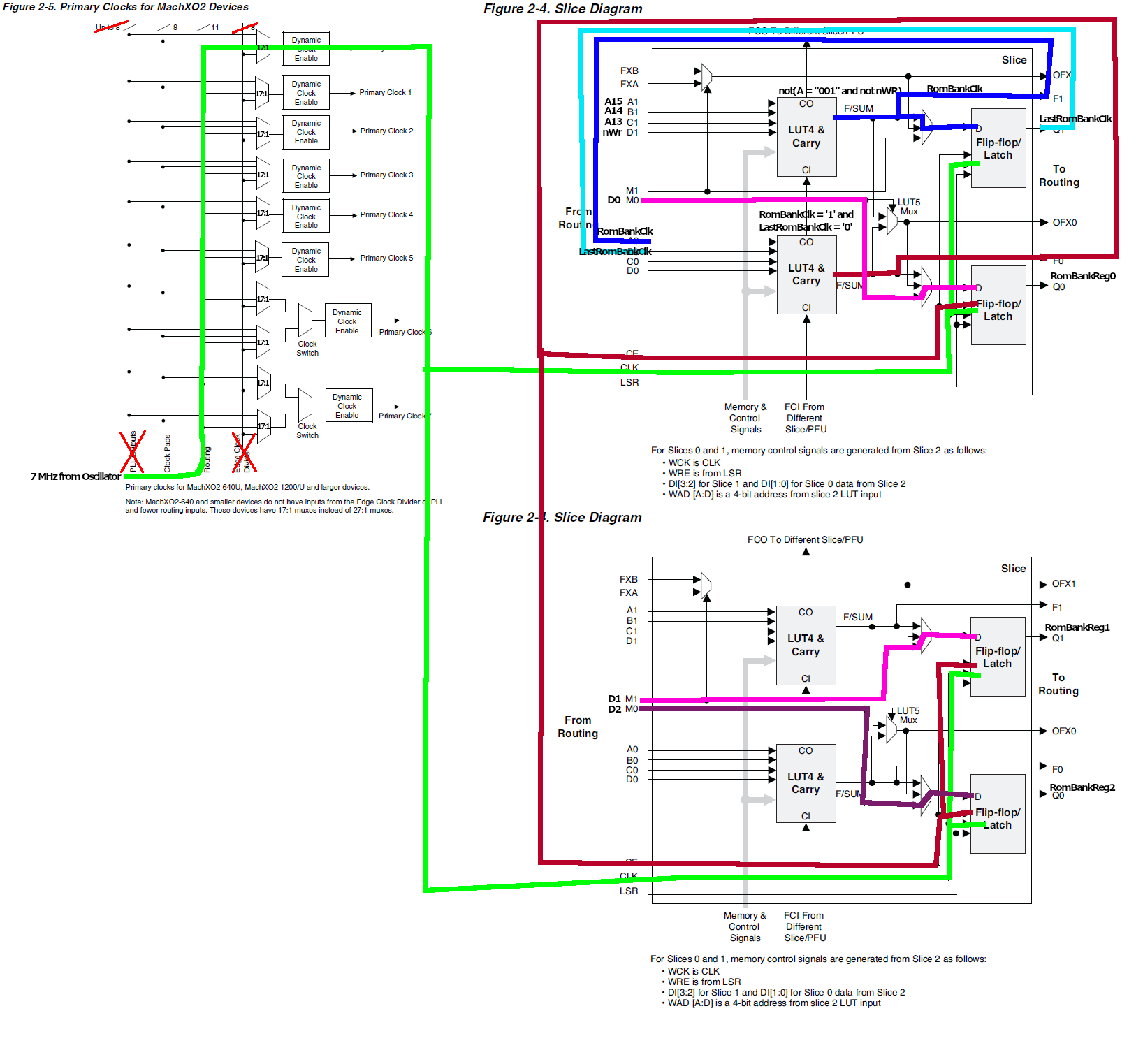

Well, kinda. The idea is to detect the edge on the "clock" that is made from the combinational logic "A = "001" and WR = '0'. You can synchronize the inputs and detect the cross-over from '0' to '1', which will serve as your enable.

Ignoring the synchronization logic for now, I drew quick diagrams of both approaches:

Method A would be the completely combinational clock approach:

Method B would be the edge detection approach (ignore missing D3, I did not want to draw it due to size):

The second is almost always preferred, because it has deterministic delays, so the routing can guarantee timing closure.

Method A might be an option for you, though. Usually, when you have at least one clock signal defined, Place & Route tools will try to transform a description of method A into method B automagically (with varying results). But since you don't have any real periodic clock signal in your current design, I don't think your tool will have done that. This was what I was getting at before.

Now basically, imagine you have method A currently and the path lengths of D0, D1, D2, D3 vary wildly (because they are unconstrained).

Then depending on the path length of the combinational clock signal, you will not actually sample D0..D3 at the same time.

Now, you would usually not have a problem with slow signals, because the setup and hold times should not be violated.

For high-speed signals and transients on the other hand, you really need to look at the setup and hold times. Basically, if this was the case, you should not see a deterministic pattern for now. But it's a case to consider overall.

Depending on the exact logic your design tool inferred, you might be running into some stability issues when you sample signals right on their transition.

Basically, since you feed a clock directly from the look-up table, there is actually no telling how often the look-up table's output will go from 0 to 1 and 1 to 0 while its inputs change. So you might have generated a few very high-speed clock edges on your inferred RomBankClk signal. That's why generating clocks this way is generally a bad idea. With the right constraints and slow sampling of inputs, you might get away with it. These clock glitches, if they have a high frequency, can however influence your flip flops and make them go into weird states, because the CPLD fabric was not designed for them. Then you might encounter wrongly set bits, bits that take unusually long to settle etc.

Usually, without specialized look-up tables that guarantee only one edge during their settling time, it's not really possible to derive a usable clock signal from inputs the way you have done.

However, the good news is that using method B is much simpler. You would need to synchronize the inputs (A15..A13, D7..D0, #WR etc.) to the right clock domain beforehand (basically, two flip flops in a row per input). However, using a reasonably fast clock, you can easily do this without using too much power and adding too much delay. Then everything is source synchronous to your internal oscillator clock and once you constrain the timing, you can guarantee pin to first flop and last flop to output delays and from there reliably calculate the overall propagation delay.
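A minimal VHDL sketch of method B as described above, with two flip flops in a row per input and the end of the bank write turned into a one-cycle enable. The entity name rom_bank_sync and all port and signal names are made up for illustration, only D1..D0 are handled, and the sketch assumes the data bus is still valid at the last system clock sample in which #WR is seen low. That assumption has not been checked against the Game Boy bus timing.

```vhdl
library ieee;
use ieee.std_logic_1164.all;

-- Illustrative sketch only: all names are assumptions.
entity rom_bank_sync is
    port (
        clk   : in  std_logic;                       -- internal oscillator
        rst_n : in  std_logic;                       -- active-low reset
        wr_n  : in  std_logic;                       -- #WR from the cartridge bus
        addr  : in  std_logic_vector(15 downto 13);  -- A15..A13
        data  : in  std_logic_vector(1 downto 0);    -- D1..D0
        bank  : out std_logic_vector(1 downto 0)     -- ROM bank register
    );
end entity rom_bank_sync;

architecture rtl of rom_bank_sync is
    -- Two-stage synchronizers: only the second stage is used by the logic.
    signal wr_s0, wr_s1     : std_logic;
    signal addr_s0, addr_s1 : std_logic_vector(15 downto 13);
    signal data_s0, data_s1 : std_logic_vector(1 downto 0);

    signal sel       : std_logic;                    -- '1' during a bank write
    signal sel_last  : std_logic;                    -- sel one clk cycle earlier
    signal data_last : std_logic_vector(1 downto 0); -- data_s1 one clk cycle earlier
    signal bank_reg  : std_logic_vector(1 downto 0);
begin
    -- Same decode as the combinational clock, but built from synchronized inputs.
    sel <= '1' when (wr_s1 = '0' and addr_s1 = "001") else '0';

    sync_and_capture : process (clk, rst_n)
    begin
        if rst_n = '0' then
            wr_s0     <= '1';   wr_s1   <= '1';
            addr_s0   <= "000"; addr_s1 <= "000";
            data_s0   <= "00";  data_s1 <= "00";
            sel_last  <= '0';
            data_last <= "00";
            bank_reg  <= "00";
        elsif rising_edge(clk) then
            -- Input synchronizers
            wr_s0   <= wr_n;  wr_s1   <= wr_s0;
            addr_s0 <= addr;  addr_s1 <= addr_s0;
            data_s0 <= data;  data_s1 <= data_s0;

            -- History needed for the edge detection
            sel_last  <= sel;
            data_last <= data_s1;

            -- End of the write: sel was active last cycle and is not any more.
            -- data_last was sampled together with the last active sel.
            if sel_last = '1' and sel = '0' then
                bank_reg <= data_last;
            end if;
        end if;
    end process sync_and_capture;

    bank <= bank_reg;
end architecture rtl;
```

Every register here is clocked by clk only, so the tools see a single clock domain and the only asynchronous paths are from the pins to the first synchronizer stage.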

I hope this helps you better understand the issues I was referring to.

As I mentioned above, you should check your setup beforehand, read up on your MachXO2, inspect the output of the design tools and try to understand what it did with your VHDL.

Offline

#98 2020-05-05 02:13:32

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

It's not much, but today I actually got the internal oscillator working. Took a few hours, but I finally have a blinking LED. Coming from C# to this is such a headache...

The MachXO datasheet says the oscillator runs from 18 to 26 MHz, but I haven't figured out how to select the frequency, or if it's even possible. But if it runs at least 18, that should be fast enough I think.

So now that I have a working signal from my oscillator, hopefully I should be able to use that to build synchronous logic.

Last edited by WeaselBomb (2020-05-05 02:14:27)

Offline

#99 2020-05-06 17:31:53

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Tauwasser, you are the MAN!

I synchronized my inputs using my FPGA clock, and Super Mario Land runs now!

It will still crash at random times, but at least it boots.

I still have to add the clock enables, but this is already pretty exciting.

Code:

architecture Behavioral of MBCx is

    -- HARDWARE INSTANTIATION
    COMPONENT OSCC
        PORT(OSC : OUT std_logic); --internal oscillator, runs at a fixed frequency anywhere between 18-26 MHz
    END COMPONENT;

    -- SYSTEM CLOCK
    signal SYS_CLK : std_logic; --everything will be synchronized to this signal

    --VARIABLES
    type ADDR_SYNC is array (1 downto 0) of std_logic_vector(15 downto 13); -- used for syncing ADDR
    type DATA_SYNC is array (1 downto 0) of std_logic_vector(1 downto 0); -- used for syncing DATA
    subtype SYNCHRONIZER is std_logic_vector(1 downto 0); -- used for syncing single signals

    -- INPUT SYNCHRONIZERS
    signal sWR : SYNCHRONIZER; -- WR
    signal sRD : SYNCHRONIZER; -- RD
    signal sAIN : SYNCHRONIZER; -- AIN
    signal sADDR : ADDR_SYNC; -- ADDR
    signal sDATA : DATA_SYNC; -- DATA

    -- REGISTERS
    signal RomBankReg : std_logic_vector(1 downto 0);

    -- CLOCKS
    signal RomBankClk : std_logic;
    signal LastRomBankClk : std_logic;

begin

    osc0 : OSCC
        Port Map (OSC => SYS_CLK);

    RomBankClk <=
        '0' when sWR(1) = '0' and sADDR(1) = "001"
        else '1';

    RA <=
        "00" when (ADDR(14) = '0' or RESET = '0')
        else RomBankReg when (RomBankReg /= "00")
        else "01";

    OE <=
        '0' when (RD = '0' or WR = '0' or AIN = '0') and ADDR(15) = '0'
        else '1';

    SyncInputs : process(RESET, SYS_CLK)
    begin
        if(RESET = '0') then
            sWR <= "00";
            sADDR <= ("000","000");
        elsif(rising_edge(SYS_CLK)) then
            -- synchronize WR
            sWR(0) <= WR;
            sWR(1) <= sWR(0);
            -- synchronize ADDR
            sADDR(0) <= ADDR;
            sADDR(1) <= sADDR(0);
            LastRomBankClk <= RomBankClk;
        end if;
    end process SyncInputs;

    RomBank : process(RESET, SYS_CLK)
    begin
        if (RESET = '0') then
            RomBankReg <= "00";
        elsif (rising_edge(SYS_CLK)) then
            if(LastRomBankClk = '0' and RomBankClk = '1') then
                RomBankReg <= DATA;
            end if;
        end if;
    end process RomBank;

end Behavioral;

EDIT:

Added clock enable to RomBank register.

Tauwasser wrote:

Then everything is source synchronous to your internal oscillator clock and once you constrain the timing, you can guarantee pin to first flop and last flop to output delays and from there reliably calculate the overall propagation delay.

In Lattice software, I can view Timing Preferences, and I see 2 fields that interest me: INPUT SETUP, and CLOCK TO OUT. I'm guessing this refers to 'pin to first flop' and 'last flop to output'?

I don't understand how to find the correct values for these fields, though, and I'm still not sure how to constrain the timing.

Last edited by WeaselBomb (2020-05-07 16:06:05)

Offline

#100 2020-05-07 16:12:13

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

WeaselBomb wrote:

Tauwasser, you are the MAN!

I synchronized my inputs using my FPGA clock, and Super Mario Land runs now!

That's great to hear!! I'm happy for you.

Kinda feared you had given up on this, to be honest, which would have been a shame.

WeaselBomb wrote:

It will still crash at random times, but at least it boots.

I still have to add the clock enables, but this is already pretty exciting.

Nice. You'll probably need to dig a bit to find out why the random crashes occur, but it's progress.

For a power-sensitive application you might want to look into scaling the oscillator frequency down, but definitely get it to work first and then optimize.

WeaselBomb wrote:

Tauwasser wrote:

Then everything is source synchronous to your internal oscillator clock and once you constrain the timing, you can guarantee pin to first flop and last flop to output delays and from there reliably calculate the overall propagation delay.

In Lattice software, I can view Timing Preferences, and I see 2 fields that interest me: INPUT SETUP, and CLOCK TO OUT. I'm guessing this refers to 'pin to first flop' and 'last flop to output'?

I don't understand how to find the correct values for these fields, though, and I'm still not sure how to constrain the timing.

Check out Lattice's Timing Closure Guide to find out what they mean exactly. The CLOCK_TO_OUT is basically what I meant with "last flop to output", i.e. clock edge arrives at flip flop: how long does it take until output is visible on pin.

The INPUT_SETUP would be relevant if you captured the external signals using an external clock (such as PHI), as it specifies the amount of time before the external clock edge when data is already valid.

For your use case, you asynchronously capture data, so you should look at the MAXDELAY constraint instead. There you can constrain the delay from pin to first flip flop (your sADDR/sWR/sDATA(0) synchronizer flip flop).

I also want to point you to Xilinx's Timing Closure User Guide, because the Lattice documentation lacks a proper introduction. You might like to read chapters 2 and 3, as they contain really great graphics regarding the different timing constraints there are and what part they really constrain. The translation to what Lattice's tools offer is not 1:1, but it should give you a better overview of what the problem is and what different solutions might exist.

Last edited by Tauwasser (2020-05-07 16:12:52)

Offline