Gameboy Development Forum

Discussion about software development for the old-school Gameboys, ranging from the "Gray brick" to Gameboy Color

(Launched in 2008)

#101 2020-05-07 22:32:31

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Thank you for those resources, I haven't stopped reading them since you posted them. I have been reading over chapter 2 quite a bit, and want to clarify a few things...

Asynchronous clock domains are those in which the transmit and capture clocks bear no frequency or phase relationship.

Ok, this sounds like my use case. All of my inputs have transmit clocks that have no relationship with SYS_CLK.

Because the clocks are not related, it is not possible to determine the final relationship for setup and hold time analysis. For this reason, Xilinx recommends using proper asynchronous design techniques to ensure that the data is successfully captured.

This reiterates what you said earlier about setup time being irrelevant for my inputs. I am using synchronizers to ensure my inputs are captured successfully, but does this actually ensure it? Even with a 2-FF synchronizer, can't meta-stability still happen? The Mean Time Between Failures (MTBF) might be extremely long, but it could still fail at any time, couldn't it? Would it be better to use a different technique, like a FIFO?

It then describes how to define the MAXDELAY of the data path:

1. Define a time group for the source registers

2. Define a time group for the destination registers

3. Define the maximum delay of the net

This is where I am uncertain. So if I wanted to set the MAXDELAY of my LastRomBankClk signal, my source would be the WR and ADDR pins, and the destination would be LastRomBankClk?

Would I group all of the input pins together in one time group? And all of the first flip-flops (sWR(0), sRD(0), etc) in another time group?

This Xilinx forum seems to suggest that asynchronous inputs are typically left unconstrained. Does this mean I should ignore MAXDELAY from pin to first flip-flop for my synchronized inputs? For example, WR -> sWR(0)?

Last edited by WeaselBomb (2020-05-08 12:44:50)

Offline

#102 2020-05-08 19:59:04

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

Constraining is kind of like an art form, really. The post specifically talks about purely combinational paths, which indeed cannot be constrained w.r.t. a certain clock. The fabric logic is usually fast enough that it does not really matter in that case.

However, if you leave all asynchronous paths unconstrained, the path the input signal takes to the first flip flop might be longer for one signal than for the other.

So for instance, if you check address pins and the A15 path takes 60ns longer than the A14 and A13 signals, you might see 0b000 when the input is really 0b100 for one clock cycle and so on. Generally you want related signals to have a defined propagation delay between parts of your design (pcb, within FPGA etc.).

The place & route logic tends to push everything into the middle of the FPGA if there are no constraints, because that's where the H-tree of the clock originates in most architectures and hence the clock delays within the fabric are the smallest. This means your asynchronous signal would need to travel a long distance through the FPGA, taking whichever route the place/route algorithm chooses. That choice is non-deterministic, so you might end up with a design that works, complete the build process again, and have a design that fails timing.

As the one user in the forum wrote, it's usually best to simply use the IOB (I/O b̶u̶f̶f̶e̶r̶ block) flip flops to capture any incoming signal and then put a number of flip flops after it to synchronize the signals.

As for your meta-stability question: Yes, it can happen, and longer chains are better but introduce additional delay. Two flip flops are the bare minimum. If the first flip flop captures the signal as it is changing (and hence a setup-/hold-time violation occurs), it might become meta-stable and take a long time to settle. However, the logic thresholds (on the input side) of the second flip flop will constrain its output to 0 or 1 on the next clock cycle regardless (unless an even unlikelier setup/hold time violation occurs). Its value might still be wrong due to meta-stability of the first flip-flop, but the second stage increases the time the signal has to settle to the correct state.
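
A minimal sketch of such a flip-flop chain, for illustration only. The entity name, generic names, and port names are hypothetical and not taken from the design discussed in this thread; the idle level of '1' assumes an active-low input such as #WR.

```vhdl
library ieee;
use ieee.std_logic_1164.all;

-- Hypothetical N-stage synchronizer for a single asynchronous input.
-- All names here are illustrative assumptions.
entity sync_chain is
  generic (
    STAGES : integer range 2 to 8 := 2;  -- two flip-flops is the bare minimum
    INIT   : std_logic := '1'            -- idle level (assumed '1' for active-low inputs)
  );
  port (
    clk      : in  std_logic;            -- capture clock (SYS_CLK in the thread)
    async_in : in  std_logic;            -- raw pin, unrelated to clk
    sync_out : out std_logic             -- value usable by clk-domain logic
  );
end entity sync_chain;

architecture rtl of sync_chain is
  signal chain : std_logic_vector(STAGES - 1 downto 0) := (others => INIT);
begin
  process (clk)
  begin
    if rising_edge(clk) then
      -- chain(0) samples the pin and may go meta-stable; every further
      -- stage gives the value one more clock period to settle.
      chain <= chain(STAGES - 2 downto 0) & async_in;
    end if;
  end process;

  -- Only the last stage is handed to the rest of the design.
  sync_out <= chain(STAGES - 1);
end architecture rtl;
```

Raising STAGES trades extra latency (one clock period per stage) for a longer settling time, which is the trade-off described above.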

There can be a time where these designs fail, that's correct. However, all circuits that transfer data between clock domains suffer from this. Guess how FIFOs synchronize the control signals between clock domains: they use chains of flip-flops. But don't be fooled by the failure part in mean time between failures. It only means that the settling did not happen in time. It does not mean that the design misbehaves.

FIFOs usually employ gray counters (among other techniques) where a failure would simply mean that the receiving side does not see that data was written for one more clock cycle than necessary. It would not imply data loss.

If you wanted to employ a FIFO solution, you would need to have synchronous inputs to begin with, because how else are they already in a clock domain? You would then have to synchronize to some clock domain A before moving the data from A to B. So in effect, this pushes the problem of synchronization just before the FIFO.

If you really wanted, you could capture the signals using a delayed PHI clock from the EDGE connector, but that has its own problems: PHI is sometimes stopped (so deriving an internal clock from it won't work), the #WR signal is synchronous to PHI * 4 (the system clock) and does toggle with edges of PHI itself, so you would have to use double data rate flip flops and use two phase-shifted versions of PHI.

You can however oversample PHI and #WR and use their state changes to know when to sample the address and data buses (because they must be stable when these signals go low).
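
As a rough sketch of that oversampling idea, assuming #WR has already passed through a synchronizer chain: the previous sample is compared with the current one, and a one-cycle strobe marks each state change. The entity and signal names below are hypothetical.

```vhdl
library ieee;
use ieee.std_logic_1164.all;

-- Hypothetical edge detector for an oversampled, already synchronized #WR.
entity wr_edge_detect is
  port (
    clk       : in  std_logic;  -- oversampling clock, faster than the bus signals
    wr_n_sync : in  std_logic;  -- #WR after the synchronizer (active low)
    wr_fall   : out std_logic;  -- '1' during the cycle in which #WR is first seen low
    wr_rise   : out std_logic   -- '1' during the cycle in which #WR is first seen high
  );
end entity wr_edge_detect;

architecture rtl of wr_edge_detect is
  signal wr_n_last : std_logic := '1';  -- previous sample, idle high
begin
  process (clk)
  begin
    if rising_edge(clk) then
      wr_n_last <= wr_n_sync;           -- remember the last sample
    end if;
  end process;

  -- A state change shows up as a difference between the two samples.
  wr_fall <= '1' when (wr_n_last = '1' and wr_n_sync = '0') else '0';
  wr_rise <= '1' when (wr_n_last = '0' and wr_n_sync = '1') else '0';
end architecture rtl;
```

The strobes could then be used as clock enables for registers that capture the synchronized address and data buses.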

As for MAXDELAY, I think the first object does not have to be a register for the constraint to work, but I haven't read up on it in detail too much. You would need to specify pin as source and the sWR(0) etc. registers as destination. For the delay, choose something reasonable that's less than your clock period and that will constrain the first stage of your synchronizer to the IO flip-flop.

I suggest putting the same delay constraint on all input signals for now, looking at the timing report to see how the final timing turns out, and going from there.

Last edited by Tauwasser (2020-05-09 06:30:33)

Offline

#103 2020-05-09 02:49:56

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Tauwasser wrote:

As the one user in the forum wrote, it's usually best to simply use on the IOB (I/O buffer) flip flops to capture any incoming signal and then put a number of flip flops to synchronize the signals after it.

I have a few questions about this...shocking, I know.

1. Is this the same thing as 'buffering inputs'? Is that also known as 'Registering Inputs'? I'm struggling with just the terminology alone.

2. What is the result of this buffering? Does it just add another register? If so, doesn't my synchronizer accomplish the same thing?

3. I think I read that an IOB flip-flop is different from a standard flip-flop (regarding timing?). If this is true, how are they different?

4. The MachXO FPGA has a feature called sysIO buffers. This is completely unrelated to IOB, or am I mistaken?

5. Should I be buffering my outputs as well?

I found this section in Lattice's FPGA Design Guide where it mentions Xilinx's IBUF primitive, and the equivalent Lattice primitive, named IB.

I then found a VHDL code example for declaring an Input Buffer using the IB primitive (the example isn't for the MachXO device but it should hopefully be similar).

Am I on the right track with this? Or am I going way off course?

Tauwasser wrote:

As for MAXDELAY, I think the first object does not have to be a register for the constraint to work, but I haven't read up on it in detail too much. You would need to specify pin as source and the sWR(0) etc. registers as destination. For the delay choose something reasonable that's less than your clock period and will constraint the first stage of your synchronizer to the IO flip-flop. I suggest to put the same delay constraint on all input signals for now and look at the timing report how the final timing turns out and go from there.

I created a group of all my inputs, named Input. Then I made another group from the first flip-flops of all my synchronizers, called InputSync.

I don't know what the frequency of my clock is, but the datasheet says it is NOT adjustable, and will be between 18 and 26 MHz. So I input the frequency for SYS_CLK at 26 MHz, and the Period at 38.5 ns.

Then I added a MAXDELAY constraint of 20 ns From "Input" to "InputSync".

The timing analysis didn't return any errors. Is there anything else I should be looking for in the analysis report?

Last edited by WeaselBomb (2020-05-09 03:22:30)

Offline

#104 2020-05-09 07:06:18

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

Hey WeaselBomb,

WeaselBomb wrote:

I have a few questions about this...shocking, I know.

That's fine.

WeaselBomb wrote:

1. Is this the same thing as 'buffering inputs'? Is that also known as 'Registering Inputs'? I'm struggling with just the terminology alone.

In the context of FPGAs, buffering and registering inputs should almost exclusively refer to the same thing: capturing the pin input signal using a register close to the pin.

In other contexts, especially mixed digital/analog systems (e.g. ADCs), buffering an input might also refer to inserting a driver IC into the signal path that drives the same voltage but is capable of delivering higher current than the original source.

FPGAs also contain buffers, i.e. driver circuits, on the inputs. These are usually configurable for drive strength (current), I/O standard (voltage, thresholds), slew rate (slope steepness) using the constraints file as well.

There might be other features as well such as pull-up/pull-down circuits, differential termination etc.

WeaselBomb wrote:

2. What is the result of this buffering? Does it just add another register? If so, doesn't my synchronizer accomplish the same thing?

Yes, the first register of your synchronizer accomplishes this task. However, without reasonable constraints, the place & route operation might not actually use one of the dedicated input buffers of the FPGA to implement the first register of your synchronizer. Instead, it might use any register within the FPGA and just route the signal from pin all the way there.

WeaselBomb wrote:

3. I think I read that an IOB flip-flop is different from a standard flip-flop (regarding timing?). If this is true, how are they different?

It depends entirely on the manufacturer. Their main feature is that they are close to the actual pin.

Usually they also have dual data rate (DDR) support (so they can be clocked on both edges of a clock and deliver different data), whereas most of the rest of the FPGA might only have single data rate (SDR) support.

Some FPGAs can delay signals in the buffer or a close-by block to help with timing closure or have native serializer-deserializer circuits (SerDes), etc.

WeaselBomb wrote:

4. The MachXO FPGA has a feature called sysIO buffers. This is completely unrelated to IOB, or am I mistaken?

The sysIO buffers (see MachXO sysIO Usage Guide) are the actual analog buffers of the MachXO. You will see they can be configured for differential termination, voltage standards etc.

The structure Xilinx calls an IOB (Input/Output Block; I wrongly said I/O Buffer here before) comprises the registers and other helper blocks (delay blocks, SerDes blocks) mentioned above. It is an old term used in older FPGAs, but it has stuck around.

WeaselBomb wrote:

5. Should I be buffering my outputs as well?

It's a good idea to buffer inputs as well as outputs in a design if your timing allows for it. That way you will have very precise output timings as the output path (last register to output pin) will contain no combinational logic. If you don't buffer these signals, the timing constraint will cover this combinational logic as well. However, with the relatively slow Game Boy timings and the high speed of the MachXO fabric, it should not be strictly necessary to buffer your output signals.

WeaselBomb wrote:

I found this section in Lattice's FPGA Design Guide where it mentions Xilinx's IBUF primitive, and the equivalent Lattice primitive, named IB.

http://i.imgur.com/rjEbvApm.png

I then found a VHDL code example for declaring an Input Buffer using the IB primitive (the example isn't for the MachXO device but it should hopefully be similar).

Am I on the right track with this? Or am I going way off course?

You're on the right track. You can look into the FPGA Libraries Reference Guide 3.11 to see what primitives are available for your FPGA. A word of caution however: Using FPGA- or vendor-specific primitives enables powerful features and better control (because no inference from VHDL is required). However, this means that jumping FPGAs or vendors becomes harder, because your design contains elements that might not have a 1:1 corresponding element in the new target. In principle, you could use primitives for every register. However, it's better to have a more generic design and let the vendor tool infer from the VHDL what you want.

Having said that, I have used FPGA-/vendor-specific primitives in the past, because it was the only way to use some FPGA features as inference from VHDL just did not work.

I found it preferable to use inference as much as possible and later constrain placement of inferred elements to the required primitives if timing constraints did not cut it.

But you're definitely on the right track and finding relevant information! Just don't switch to using primitives only for your design willy-nilly.

WeaselBomb wrote:

I created a group of all my inputs, named Input. Then I made another group from the first flip-flops of all my synchronizers, called InputSync. [...] Then I added a MAXDELAY constraint of 20 ns From "Input" to "InputSync".

The timing analysis didn't return any errors. Is there anything else I should be looking for in the analysis report?

Sounds good to me. If your timing report contains the relevant paths, you should check whether the results are as expected. Sometimes you think a constraint covers a specific path, but later find out from the Timing Analysis Report that it did not.

WeaselBomb wrote:

I don't know what the frequency of my clock is, but the datasheet says it is NOT adjustable, and will be between 18 and 26 MHz. So I input the frequency for SYS_CLK at 26 MHz, and the Period at 38.5 ns.

Ah, I think I read the MachXO2 datasheet before, because I was confused about which part you were using.

I found this FAQ entry that states as much: the MachXO oscillator cannot be configured. The MachXO sysCLOCK Design and Usage Guide talks a bit about the oscillator and the ways clocks can be routed inside the MachXO.

I think you will need to experiment a bit if you want to hit your power target for the RTC application. Assuming 18 MHz, even dividing by 4 (using two toggle flip flops) will leave you with 4.5 MHz, which is enough to oversample all your input signals comfortably. A delay of ~222ns of the control signals should also be acceptable for your other components (SRAM, FLASH).
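
A minimal sketch of the divide-by-4 with two toggle flip flops, for illustration. The entity and signal names are hypothetical, and it assumes the tools route the derived clock onto a suitable clock net.

```vhdl
library ieee;
use ieee.std_logic_1164.all;

-- Hypothetical divide-by-4 built from two cascaded toggle flip-flops.
entity clk_div4 is
  port (
    clk_in  : in  std_logic;  -- e.g. the internal oscillator (18 to 26 MHz per the datasheet)
    clk_out : out std_logic   -- clk_in / 4 (4.5 MHz for an 18 MHz input)
  );
end entity clk_div4;

architecture rtl of clk_div4 is
  signal div2 : std_logic := '0';
  signal div4 : std_logic := '0';
begin
  -- First toggle flip-flop: changes state on every rising edge of clk_in,
  -- which halves the frequency.
  process (clk_in)
  begin
    if rising_edge(clk_in) then
      div2 <= not div2;
    end if;
  end process;

  -- Second toggle flip-flop, clocked by the first: halves it again.
  process (div2)
  begin
    if rising_edge(div2) then
      div4 <= not div4;
    end if;
  end process;

  clk_out <= div4;
end architecture rtl;
```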

Offline

#105 2020-05-13 21:18:37

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Tauwasser wrote:

I think you will need to experiment a bit if you want to hit your power target for the RTC application.

I agree. I'm tempted to just use an external crystal on the PCB, if there is room for it. I'd like to keep power consumption below 85 mA, if possible. I think that's close to how much power Pokemon Gold and Silver draw, if I remember correctly.

I think I've narrowed down a problem to my RA outputs. My RA equation is:

Code:

RA <= "00" when (sADDR14(1) = '0' or RESET = '0') else RomBankReg when (RomBankReg /= "00") else "01";

The problem is that games do not boot with this equation. When I change it to use A14 BEFORE it is synchronized, it seems to boot just fine (but it will still crash randomly).

So that's the current thing I'm trying to figure out. I still don't know what other timing constraints are necessary, like CLOCK_TO_OUTPUT. And if other constraints are necessary, I'm not sure how to find those values yet. Once I figure out the timing for the entire RA path, I imagine this design should work...

I have a stupid question, I don't need to synchronize RESET, right? I wouldn't think so, because it's being used as an asynchronous reset anyway.

EDIT:

It sounds like CLOCK_TO_OUT is irrelevant for my use case. Every explanation says it is used to constrain either system synchronous (when the clock is external) or source synchronous (when the clock is generated with the data) output paths. But, as you said earlier, I am capturing data asynchronously.

I'm kinda stumped at the moment. I don't know what else to constrain besides MAXDELAY for my outputs.

Last edited by WeaselBomb (2020-05-14 13:49:24)

Offline

#106 2020-05-14 16:15:51

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

WeaselBomb wrote:

I think I've narrowed down a problem to my RA outputs. My RA equation is:

Code:

RA <= "00" when (sADDR14(1) = '0' or RESET = '0') else RomBankReg when (RomBankReg /= "00") else "01";

The problem is that games do not boot with this equation. When I change it to use A14 BEFORE it is synchronized, it seems to boot just fine (but it will still crash randomly).

Are we talking about a cold boot here, or is the cartridge already powered but you pulled reset low for some time?

If it's the former, the problem might be related to the time it takes for the MachXO to configure or the oscillator to start up. Lattice claims an "instant-on" power-up in microseconds, but I can't find exact numbers for either the configuration time after power-up or the oscillator start-up time.

If push comes to shove, you can try holding reset manually (using a switch or something) while the cartridge powers up for a bit. I would imagine the Game Boy to be slower in starting than the MachXO, but I don't have any numbers on that either :-/

WeaselBomb wrote:

I have a stupid question, I don't need to synchronize RESET, right? I wouldn't think so, because it's being used as an asynchronous reset anyway.

Theoretically you should. There is a recommended design (by Xilinx) that uses an asynchronously reset shift register of some depth to generate a synchronous reset for all parts of the design.

With an asynchronous reset, you can have uncertainty of one clock cycle between parts of the design, i.e. some parts start a little bit before other parts and depending on the design, this might cause issues.
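
A rough sketch of that kind of reset shift register, assuming an active-low reset pin like RESET in this thread. The entity, generic, and port names are hypothetical, and this is not a copy of the Xilinx reference design.

```vhdl
library ieee;
use ieee.std_logic_1164.all;

-- Hypothetical reset synchronizer: reset asserts immediately (asynchronously)
-- but is released only after DEPTH rising clock edges, aligned to clk.
entity reset_sync is
  generic (
    DEPTH : integer range 2 to 8 := 2   -- length of the shift register
  );
  port (
    clk         : in  std_logic;
    reset_n_in  : in  std_logic;        -- raw active-low reset pin
    reset_n_out : out std_logic         -- active-low reset for the rest of the design
  );
end entity reset_sync;

architecture rtl of reset_sync is
  signal shreg : std_logic_vector(DEPTH - 1 downto 0) := (others => '0');
begin
  process (reset_n_in, clk)
  begin
    if reset_n_in = '0' then
      -- Asynchronous assertion: hold every stage in reset.
      shreg <= (others => '0');
    elsif rising_edge(clk) then
      -- After release, shift in '1' so the output rises on a clock edge.
      shreg <= shreg(DEPTH - 2 downto 0) & '1';
    end if;
  end process;

  reset_n_out <= shreg(DEPTH - 1);
end architecture rtl;
```

Every register in the design would then use reset_n_out instead of the raw pin, so all of them see the release in a consistent relationship to the clock.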

WeaselBomb wrote:

So that's the current thing I'm trying to figure out. I still don't know what other timing constraints are necessary, like CLOCK_TO_OUTPUT. And if other constraints are necessary, I'm not sure how to find those values yet. Once I figure out the timing for the entire RA path, I imagine this design should work...

EDIT:

it sounds like CLOCK_TO_OUT is irrelevant for my use case. every explanation says it is either used to constrain system synchronous (when the clock is external), or source synchronous (when the clock is generated with the data) output paths. But, as you said earlier, I am capturing data asynchronously.

I'm kinda stumped at the moment. I don't know what else to constrain besides MAXDELAY for my outputs.

Well, I'm not sure what to tell you. The CLOCK_TO_OUTPUT should constrain the path from last flip flop to output (because it would be the same for a source synchronous design). Maybe I misunderstood the constraint from the Lattice documentation if it does not work that way. That's one of the many pitfalls of hardware description: every toolchain and vendor does things slightly differently.

If you look at the Timing Report, do you see your output paths and their individual delays? Are they within a certain margin to each other that you're comfortable with? If so, I wouldn't stress about this too much until an issue arises, as the FPGA fabric is plenty fast compared to FLASH chips or the Game Boy clock.

EDIT:

I also just noticed that you initialize sWR to the wrong value. You should initialize it to all ones instead of zeros or else your logic is going to see a write as the first operation (until you got real data from the #WR pin after two clock cycles).

Last edited by Tauwasser (2020-05-14 16:31:34)

Offline

#107 2020-05-14 21:49:31

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Tauwasser wrote:

I also just noticed that you initialize sWR to the wrong value. You should initialize it to all ones instead of zeros or else your logic is going to see a write as the first operation (until you got real data from the #WR pin after two clock cycles).

Woops, yeah I fixed that after it was causing some problems. Here's what I'm working with now:

Code:

library IEEE;
library MACHXO;
use MACHXO.ALL;
use IEEE.STD_LOGIC_1164.ALL;

entity MBCx is
    port
    (
        RD    : in std_logic;
        WR    : in std_logic;
        AIN   : in std_logic;
        ADDR  : in std_logic_vector(15 downto 13);
        DATA  : in std_logic_vector(1 downto 0);
        RESET : in std_logic;
        RA    : out std_logic_vector(15 downto 14);
        OE    : out std_logic
    );
end MBCx;

architecture Behavioral of MBCx is

    -- OSCILLATOR
    COMPONENT OSCC
        PORT(OSC : OUT std_logic);
    END COMPONENT;

    -- SYSTEM CLOCK
    signal SYS_CLK : std_logic; --everything will be synchronized to this signal

    -- INPUT SYNCHRONIZERS
    signal sWR     : std_logic_vector(1 downto 0);
    signal sRD     : std_logic_vector(1 downto 0);
    signal sAIN    : std_logic_vector(1 downto 0);
    signal sADDR13 : std_logic_vector(1 downto 0);
    signal sADDR14 : std_logic_vector(1 downto 0);
    signal sADDR15 : std_logic_vector(1 downto 0);
    signal sDATA0  : std_logic_vector(1 downto 0);
    signal sDATA1  : std_logic_vector(1 downto 0);

    -- REGISTERS
    signal RomBankReg : std_logic_vector(1 downto 0);

    -- CLOCKS
    signal RomBankClk     : std_logic;
    signal LastRomBankClk : std_logic;

begin

    osc0 : OSCC
        Port Map (OSC => SYS_CLK);

    RomBankClk <=
        '0' when (sWR(1) = '0' and sADDR15(1) = '0' and sADDR14(1) = '0' and sADDR13(1) = '1')
        else '1';

    RA <=
        "00" when (sADDR14(1) = '0' or RESET = '0')
        else RomBankReg when (RomBankReg /= "00")
        else "01";

    OE <=
        '0' when (sRD(1) = '0' or sWR(1) = '0' or sAIN(1) = '0') and sADDR15(1) = '0'
        else '1';

    SyncInputs : process(RESET, SYS_CLK)
    begin
        if(RESET = '0') then
            sRD <= "11";
            sWR <= "11";
            sAIN <= "11";
            sADDR13 <= "11";
            sADDR14 <= "11";
            sADDR15 <= "11";
            LastRomBankClk <= '1';
        elsif(rising_edge(SYS_CLK)) then
            -- synchronize WR
            sWR(0) <= WR;
            sWR(1) <= sWR(0);
            sRD(0) <= RD;
            sRD(1) <= sRD(0);
            sAIN(0) <= AIN;
            sAIN(1) <= sAIN(0);

            -- synchronize ADDR
            sADDR15(0) <= ADDR(15);
            sADDR15(1) <= sADDR15(0);
            sADDR14(0) <= ADDR(14);
            sADDR14(1) <= sADDR14(0);
            sADDR13(0) <= ADDR(13);
            sADDR13(1) <= sADDR13(0);

            -- synchronize DATA
            sDATA0(0) <= DATA(0);
            sDATA0(1) <= sDATA0(0);
            sDATA1(0) <= DATA(1);
            sDATA1(1) <= sDATA1(0);

            LastRomBankClk <= RomBankClk;
        end if;
    end process SyncInputs;

    RomBank : process(RESET, SYS_CLK)
    begin
        if (RESET = '0') then
            RomBankReg <= "00";
        elsif (rising_edge(SYS_CLK)) then
            if(LastRomBankClk = '0' and RomBankClk = '1') then
                RomBankReg(1) <= sDATA1(1);
                RomBankReg(0) <= sDATA0(1);
            end if;
        end if;
    end process RomBank;

end Behavioral;

Offline

#108 2020-05-17 19:36:53

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Well I've spent a few days (10?) trying to figure out this timing thing, and I feel like I've gotten nowhere. The game boots on Gameboy Color, but not DMG. Even though it will boot on GBC, it still resets intermittently. Changing my RA equation to use ADDR(14) instead of sADDR14(1) allows it to boot on DMG, but still experiences the same reset issue.

I have a Period constraint of 38 ns defined, which covers 80% of the design.

I also have a MAXDELAY constraint of 30 ns from input to first register defined.

I've tried buffering RA, which applied the Period constraint between sADDR14 and RA, still no luck.

I don't have a CLOCK TO OUT constraint defined, because I'm not sure what the value should be. Trying to find good examples for this constraint is like hunting for unicorns, they just don't exist.

Either way, RomBankReg and sADDR14 both reach RA within 5 ns. At that speed, I have no clue why it won't work.

At this point, I'm experimenting with other designs. I had another look at some timing charts and noted that the Address bus is stable by the time A15 is asserted. If it's stable, I shouldn't have to synchronize anything, because setup & hold times should never be violated. So I played around with using the falling edge of A15 as a clock for ROM access:

Code:

RomRead : process(RESET, ROMCS)
begin
    if(RESET = '0') then
        regRA <= "00";
    elsif(falling_edge(ROMCS)) then
        if(RD = '0') then
            regRA(1) <= RomBankReg(1) and ADDR(14);
            regRA(0) <= (RomBankReg(0) or not(RomBankReg(1) or RomBankReg(0))) and ADDR(14);
        end if;
    end if;
end process RomRead;

Setting the ROM bank would work similarly to before, using the same glitch filtering. I would use ADDR(14) and ADDR(13) as a clock enable:

Code:

MBC_WriteClk <=
    '0' when (ROMCS = '0' and WR = '0')
    else '1';

WriteMBC : process(RESET, MBC_WriteClk)
begin
    if(RESET = '0') then
        RomBankReg <= "00";
    elsif(rising_edge(MBC_WriteClk)) then
        if(ADDR = "01") then -- 0x2000 - 0x3FFF
            RomBankReg(1) <= DATA(1);
            RomBankReg(0) <= DATA(0);
        end if;
    end if;
end process WriteMBC;

But I guess this has its own problems. Doesn't the Clock Enable have to arrive before the clock edge?

Overall, this idea had some success, the game flashed and booted, but there were glitches. It is probably bad design since it isn't synchronous.

I'm really not sure what I'm doing wrong with the synchronous approach. The CPLD was nice and easy with its logic gates. The FPGA and its LUTs are migraine material. Honestly, I'm not sure I will ever figure this out.

Last edited by WeaselBomb (2020-05-18 17:01:43)

Offline

#109 2020-05-18 19:37:20

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

Honestly, I'm not sure why it wouldn't work. The logic is simple enough. I suspect there is a different flaw at play here that's outside of the actual MachXO.

I know it sucks, but did you double and triple check every solder joint? You did enable pull-ups inside the MachXO, right? The capacitors around the MachXO and Voltage Translators are all populated and the required values, right? Did you change some connections (AIN, #WE?) using wires or was that a different board?

One thing you can try (and which the original MBCs do, too) is that you include RESET in the OE logic. Usually, during RESET the ROM is accessible for reading. However, because you require the clock to be running and the addresses (A15) to match, this isn't the case with your code. Maybe the GBC just takes longer to boot and it really is a timing issue with configuration and when the DMG starts running?

Are the intermittent resets actual resets, i.e. Game Boy logo and everything or just the game crashing and booting up again with weird colors? Just wanting to double check if you really do get spurious resets.

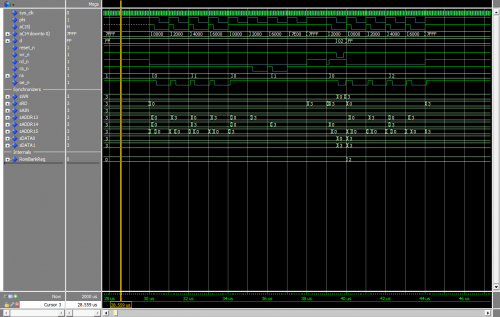

Just to double check, I did create a little test bench and ran Modelsim with your design:

As you can see, everything works as expected. Because I did not have the Lattice library, I created my own 18 MHz clock and fed it into your design.

My testbench waits for 10us, then holds RESET_N low (asserted) for another 10us, then begins by reading from 0x0000, 0x2000, ... etc. The 0x7FFF part is a bus hold pattern, basically all pins go to high and PHI is stopped for a certain amount of time. Then a single write of 0x02 to 0x2000, another cascade of reads again, ROM bank change is visible as expected. Delays due to oversampling are ~0.1us from actual state of GEC until the OE_N pin is asserted by your design.

EDIT: Got curious and installed Lattice Diamond. I compiled their MachXO library and used it for simulation. However, their OSCC model is really just a simple clock process and does not simulate start-up times or anything. Curiously, they produce a square wave at 20 MHz (1 / 50 ns) in their OSCC model.

Last edited by Tauwasser (2020-05-18 20:27:39)

Offline

#110 2020-05-22 10:43:34

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Tauwasser wrote:

I know it sucks, but did you double and triple check every solder joint? You did enable pull-ups inside the MachXO, right? The capacitors around the MachXO and Voltage Translators are all populated and the required values, right? Did you change some connections (AIN, #WE?) using wires or was that a different board?

Actually, everything is still on the breadboard. The FPGA is soldered to a breadboard adapter, but its connections pass continuity testing with my multimeter. All pull-ups are enabled, and the decoupling caps are all present. It's very possible that the breadboard is just unreliable. I think I'm confident enough to try it on a PCB, what do you think?

If I do assemble a PCB, I had a couple questions about the oscillator. I definitely agree the frequency needs to be divided to save power, but how fast should it run? I know you said dividing it twice (to 4.5 MHz) would be plenty fast enough, but does this still work with GBC double speed mode? From what I've read, as long as I sample above the Nyquist rate (double the frequency of my inputs), it should be ok? And double speed mode runs just over 2 MHz, if I'm not mistaken? So 4.5 should still be enough to handle this, right?

That's assuming my FPGA is running as slowly as possible (18 MHz). In the "worst case" (regarding power consumption), it could be running at 26 MHz, and dividing it will only drop it to 6.5 MHz. I will have to check the power estimator to see how much power is drawn at this speed.

Speaking of power consumption, I had originally planned to use a MachXO2, but there is only one model that accepts 3.3v for VCCINT. This model uses a linear regulator to supply 1.2v, which at first seemed too inefficient for me, but I might check the power estimate in Lattice Diamond and see how much current it will draw. I really prefer the MachXO2 just because of its adjustable oscillator, but if my current oscillator is acceptable I will probably stick with that.

Or if all else fails, I could try to fit an external oscillator somewhere, but I don't think that should be necessary.

Last edited by WeaselBomb (2020-05-22 11:02:03)

Offline

#111 2020-05-23 11:33:34

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

If it's still the setup with the long cables you shot a picture of earlier, then yes, I would suspect something might be amiss there, too.

As far as the oscillator goes: yep, keep above Nyquist and you should be fine. Usually you would want to have at least twice Nyquist just for safety.

Usually you don't want an integer multiple of the original frequency either but rather a rational multiple if you cannot go much higher than Nyquist; the reason being that it would be possible that the clocks align and every second sample fails setup/hold time constraints etc.

But 4.5 MHz should work nicely for both GBC modes.

As far as power estimation goes: keep in mind it really is estimation. You will need to measure it in the end to know. You might measure your design right now and compare with Lattice Diamond's power estimation of your current design to get a rough idea about the accuracy of the estimation.

Offline

#112 2020-05-25 23:07:42

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

The current draw seemed reasonable from the MachXO, even at 18 MHz or above. It seemed to fluctuate a lot, but I never saw it go above 80 mA on my multimeter. I think once the clock frequency is quartered, it will be in a great spot.

So, I think I could stick with the MachXO, but I really like the idea of the MachXO2, since it comes in a QFN-48 package. There would be a lot less wasted I/O and board space if I could pull it off.

Actually, the MachXO2 QFN-48 package only has 40 I/O, 4 of which are JTAG pins (so really 36 I/O). Lattice Diamond says I am 1 I/O over capacity with my current design, so I need to reduce my I/O count somehow.

Could I remove AIN, RD, or PHI?

AIN is only used in the OE equation, and the only time OE is asserted is when I'm accessing ROM, RAM or RTC registers. So I was thinking the OE equation could be simplified to:

Code:

OE <= '0' when (ADDR(15) = '0' or (CS = '0' and ADDR(15 downto 13) = "101")) else '1';

The only thing I'm worried about is idle states (RD = '1' and WR = '1'). Would this cause bus conflicts? I don't see how it could, since everything is memory-mapped, and ROM or RAM would be selected in this case.

For RD, I think its only purpose (besides the OE equation) is reading RTC registers. Could I just use WR = '1' instead? So if I want to read an RTC register, I would look for:

Code:

ADDR(15 downto 13) = "101" and WR = '1' and RamBankReg > "0111"

This still has the risk of bus conflicts during idle states, but I'm not sure if that's a possibility or not.

PHI is only being used to latch new addresses in the FRAM chip. The FRAM I am using latches addresses on the falling edge of /CE, so consecutive reads fail without toggling this signal in between different addresses. PHI serves this purpose well, but couldn't I use /CS from GEC pin 5? I recently learned that /CS begins every clock cycle at logic high, so couldn't this fulfill the same purpose? It would be nice to remove PHI, since it only serves one purpose, and I am already including /CS anyway. I might just have to try this out and see for myself.

I suppose a cheap and easy way to conserve I/O would be to multiplex the RA and AA signals. That would save me 4 I/O and would hopefully not be much trouble to route the traces.

Last edited by WeaselBomb (2020-05-26 18:30:42)

Offline

#113 2020-05-28 22:01:07

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

I got really annoyed when I was routing power and ground, and trying to place decoupling capacitors for the FPGA. What's sad is that I'm only using 1 cap per pin, and the datasheet recommends 2. After a couple days of research, I've reached the conclusion that using 2-layer boards comes at a cost. The copper pours for power and ground will inevitably be "cut up", and there's really no way around it.

By the time all of the components are placed, this board is very crowded. The board I routed earlier (with your help of course) is ok, but I really don't like how the RAM is grounded. It's probably about the best I can do with the space available. Also, I'd like to eventually add a supervisor circuit to perform battery switchover, for SRAM support. So it'll only get more complicated from here.

For these reasons, I might move to 4-layers for this design.

What do you think Tauwasser? Is a 4-layer PCB overkill for this project?

Offline

#114 2020-06-03 15:44:06

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

Hmm, while four layers are indeed better for signal integrity and routing power, I'd say it's strictly a convenience except if your CPLD/FPGA etc. requires it due to pin count, power pin placement etc. Honestly, if four layers will make your life easier and you can take the hit in production cost, then go for it. It is hardly overkill if you're concerned about grounding at this point.

As for using /CS to toggle the FRAM's address latching: I think regular reads and writes behave as you expect; CGB DMA, however, does not. I seem to recall that gekkio has a timing diagram somewhere for CGB DMA ("new-style DMA") that shows this.

As for removing RD, I think it's a bad idea, because you would rely on address decoding alone in order to know when to read and the addresses might be valid just after write (i.e. after #WR is deasserted) when the GameBoy CPU is still driving the bus as well.
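To illustrate keeping RD in the decode, an RTC register read enable qualified by RD (rather than by WR = '1') might be sketched as below. The entity name rtc_read_decode and the output RtcReadEn are hypothetical; the address and bank conditions are taken from the equations posted above, and RamBankReg is assumed to be 4 bits wide.

Code:

library ieee;
use ieee.std_logic_1164.all;
use ieee.numeric_std.all;
-- Hypothetical sketch: RtcReadEn is an assumed internal signal name.
entity rtc_read_decode is
  port (
    ADDR       : in  std_logic_vector(15 downto 13);
    RD         : in  std_logic;  -- active low
    CS         : in  std_logic;  -- active low
    RamBankReg : in  std_logic_vector(3 downto 0);
    RtcReadEn  : out std_logic   -- active high
  );
end entity rtc_read_decode;
architecture rtl of rtc_read_decode is
begin
  -- Only drive RTC data while the CPU is actually reading (RD low),
  -- so idle cycles and the time just after a write never enable it.
  RtcReadEn <= '1' when (RD = '0' and CS = '0' and ADDR = "101"
                         and unsigned(RamBankReg) > 7) else '0';
end architecture rtl;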

Multiplexing the AA and RA signals is an excellent way to reduce pin count before removing anything else you might need later.
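A rough sketch of what that multiplexing could look like, assuming the four shared pins (called MA here, a made-up name) are wired to both the ROM's lower RA bank lines and the RAM's AA lines, and that RomBankReg and RamBankReg are the internal bank registers:

Code:

library ieee;
use ieee.std_logic_1164.all;
-- Hypothetical sketch: MA, bank_mux and the 4-bit register widths are
-- assumptions for illustration, not the actual design.
entity bank_mux is
  port (
    ADDR       : in  std_logic_vector(15 downto 14);
    RomBankReg : in  std_logic_vector(3 downto 0);  -- low 4 bits of ROM bank
    RamBankReg : in  std_logic_vector(3 downto 0);
    MA         : out std_logic_vector(3 downto 0)   -- shared RA/AA pins
  );
end entity bank_mux;
architecture rtl of bank_mux is
begin
  -- 0x0000-0x3FFF: fixed ROM bank 0
  -- 0x4000-0x7FFF: switchable ROM bank
  -- otherwise:     RAM bank (only matters while the RAM is selected)
  MA <= "0000"     when ADDR = "00" else
        RomBankReg when ADDR = "01" else
        RamBankReg;
end architecture rtl;

Since only one of ROM or RAM is selected at a time, the chip that is not selected simply ignores whatever is on the shared lines.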

You might also want to think about removing AIN from the equation and using a special register to unlock regular #WR writing to FLASH or something if pin count is to be reduced.

On the power consumption front, I'd honestly have hoped for less than 80 mA draw to start with. You're already at about 5 times the supply current stated in the family datasheet, which of course assumes a blank device and no running clock. It's hard to gauge how much lower you could reasonably get it.

Offline

#115 2020-06-25 09:50:54

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Still working on this. I wish I had more to report on, but things have not gone well. I drew up a simple PCB with a MachXO2 and an S29AL008J70TFI020 flash for prototyping. The boards look flawless, I couldn't find any faults even under a microscope, but for some reason I can't access the flash. Both IDs return as 0xFF. Everything was soldered via hot air, so maybe I applied too much heat, but I really don't know. I thought maybe the /OE pin wasn't being driven, or address lines weren't connecting, but the multimeter says those are working fine. Maybe it was dumb to switch FPGAs and flash chips in the same test. I will probably breadboard one more time using the new chip until I'm confident in using the MachXO2.

So yeah, just wanted to say this project isn't dead, just delayed. Again.

Offline

#116 2020-06-26 18:37:43

- Tauwasser

- Member

- Registered: 2010-10-23

- Posts: 160

Re: MBC5 in WinCUPL (problem)

It's good that you're still going strong with this one. Do share your new board schematic if you want a second opinion.

Offline

#117 2020-08-20 17:58:41

- WeaselBomb

- Member

- Registered: 2018-03-06

- Posts: 86

- Website

Re: MBC5 in WinCUPL (problem)

Well, I'm back now, and I don't know if I should be embarrassed or relieved, but those boards I soldered nearly 2 months ago actually DO work! I was so frustrated with them at the time that I almost trashed them, and I am so glad that I didn't!

They didn't appear to work before, because I made a HUGE mistake. I was prototyping with 74LVC chips, but my PCB is using 74ALVC. These chips use opposite polarity on the DIR pin! After I made a change to the flasher firmware to assert BOTH the /WR and /AIN pins when writing to flash, it worked!

I don't know how I missed this before, but after taking a break, I realized it pretty much instantly upon revisiting it. Tetris and Super Mario Land are reading, writing, and running!

Last edited by WeaselBomb (2020-08-20 18:00:30)

Offline